Unit 5: Curricular Evaluation

5.1. Concept, Need for Curricular Evaluation

5.2. Factors associated with Curricular Evaluation (Learner, Content, Instructor and Resources)

5.3. Areas of Curricular Evaluation: Context, Input, Process and Product

5.4. Methods and Tools for Curricular Evaluation

5.5. Challenges in Curricular Evaluation

5.1. Concept, Need for Curricular Evaluation

Curriculum evaluation is an essential component in the process of adopting and implementing any new curriculum in any educational setting. Its purpose is to decide whether or not the newly adopted curriculum is producing the intended results and meeting the objectives that it has set forth. Another purpose of curriculum evaluation is to gather data that will help in identifying areas in need of improvement or change.

As with most terms in the curriculum, there are a variety of definitions given to evaluation. Simply described, it is a process of establishing the extent to which the objectives of a program have been achieved by analyzing performance in given areas. Thus, evaluation is a judgmental process aimed at decision-making. Doll (1992) also defines evaluation as a broad and continuous effort to inquire into the effects of utilizing educational content and process to meet clearly defined goals.

Yet another definition states that “evaluation is a process of collection and provision of data for the sake of facilitating decision making at various stages of curriculum development.” (Shiundu & Omulando, 1992)

Assessment, Measurement, and Testing Relating to Evaluation

There is often confusion between the terms: assessment, measurement, and evaluation. The following descriptions help define the differences.

Assessment

Assessment is the process that shows whether there has been a change in a student’s performance in a certain academic area. The change revealed through assessment can be given a value by quantifying procedures referred to as educational measurement. Assessments include the full range of procedures used to gain information about student learning and the formation of value judgments concerning learning progress.

Measurement

Measurement is the means of determining the degree of achievement of a particular objective or competency. For example, the outcomes for individual students are measured (using assessments) to see if the student has met the outcomes. Measurement refers to the determination of the actual educational outcomes and comparing these with intended outcomes as expressed in the objectives of the program. Measurement describes something numerically. There is currently a focus on the measurement of 21st century skills for students.

Evaluation

In the above context, evaluation is the process of making a value judgment based on the information gathered through measurement and testing. Evaluation of a curriculum occurs so that the developers can accept, change, or eliminate various parts of a curriculum. The goal of evaluation is to understand whether or not the curriculum is producing the desired results for students and teachers. Evaluation is a qualitative judgement.

Monitoring versus Evaluation

Monitoring

What is monitoring? It is a continuous review of the progress of planned activities. Put differently, it is the routine daily, weekly, or monthly assessment of ongoing activities and progress. Monitoring focuses on what is being done. It is centered on two questions:

- Is the curriculum project reaching the specified target population?

- Are the various practices and intervention efforts undertaken as specified in the curriculum project design?

Monitoring is important in examining the inputs and outputs. Indeed, it can be considered as a “process evaluation.” Monitoring thus helps to ensure that the implementation is on course.

Evaluation

Evaluation, in relation to monitoring, is the episodic assessment of overall achievement. It examines what has been achieved and what impact has been made. Evaluation also examines gaps in the curriculum, including what students could have achieved but did not.

Why is Curriculum Evaluation Necessary?

There are several parties, or stakeholders, interested in the process and results of curriculum evaluation.

- Parents are interested because they want to be assured that their children are being provided with a sound, effective education.

- Teachers are interested because they want to know that what they are teaching in the classroom will effectively help them cover the standards and achieve the results they know parents and administration are expecting.

- The general public is interested because they need to be sure that their local schools are doing their best to provide solid and effective educational programs for the children in the area.

- Administrators are interested because they need feedback on the effectiveness of their curricular decisions.

- Curriculum publishers are interested because they can use the data and feedback from a curriculum evaluation to drive changes and upgrades in the materials they provide.

Purpose of curriculum evaluation

Education prepares future generations to take their due place in society. It is therefore essential that substandard educational goals, materials, and methods of instruction are not retained but updated in consonance with advances in the social, cultural, and scientific fields. It is also important to ascertain how different educational institutions and situations interpret a given or prescribed curriculum. Hence arises the need for curriculum evaluation.

Curriculum evaluation monitors and reports on the quality of education. Cronbach (1963) distinguishes three types of decisions for which evaluation is used.

1. Course improvement: deciding what instructional materials and methods are satisfactory and where changes are needed.

2. Decisions about individuals: identifying the needs of the pupil for the sake of planning instruction and grouping, and acquainting the pupil with his or her own deficiencies.

3. Administrative regulations: judging how good the school system is and how good individual teachers are. The goal of evaluation must be to answer questions of selection, adoption, support, and worth of educational materials and activities. It helps in identifying the necessary improvements to be made in content, teaching methods, learning experiences, educational facilities, staff selection, and the development of educational objectives. It also serves the need of policy makers, administrators, and other members of society for information about the educational system.

4. Community – What are the attitudes and inputs of the community to the curriculum and the curriculum development process?

Objectives of Curriculum Evaluation

1. To determine the outcomes of a programme.

2. To help in deciding whether to accept or reject a programme.

3. To ascertain the need for the revision of the course content.

4. To help in future development of the curriculum material for continuous improvement.

5. To improve methods of teaching and instructional techniques.

Thus, curriculum evaluation refers to the process of collecting data systematically to assess the quality, effectiveness, and worthiness of a program. The process of curriculum development and implementation raises issues like:

- What are the objectives of the program?

- Are these objectives relevant to the needs of the individual and society?

- Can these objectives be achieved?

- What are the methods being used to achieve these objectives?

- Are the methods the best alternatives for achieving these objectives?

- Are there adequate resources for implementing a curriculum?

Certain terms are closely related to evaluation. These include assessment, measurement, and testing.

5.2. Factors associated with Curricular Evaluation (Learner, Content, Instructor and Resources)

After a curriculum is developed, the curriculum committee can breathe a sigh of relief, but their work is not done. Only when the curriculum is implemented and then evaluated will the committee know to what extent their efforts were successful. It is fair to say that no curriculum is perfect because there are almost always factors that may influence the curriculum that were unknown during the development process.

The criteria for evaluating the curriculum generally include alignment with the standards, consistency with objectives, and comprehensiveness of the curriculum. Relevance and continuity are also factors. Many assessments do not cover the entire range of objectives because some objectives are difficult to assess effectively and objectively (e.g. the affective domain, where value traits such as integrity and honesty are tested through written exams). The psychomotor domain, which helps our brain coordinate physical tasks such as catching a ball, has objectives that are often inadequately tested due to logistical difficulties. Even within the cognitive domain, the knowledge involving the development of intellectual skills, only a small portion is usually tested. However, a lot of effort is made to ensure quality examinations, at least at the summative evaluation level, through a rigorous process of developing exams, which go through several stages including group analysis, etc.

Comprehensiveness

From Curriculum Studies, pp. 92-93

Comprehensiveness means that all the objectives of the curriculum are evaluated. In practice, often only the cognitive domain is tested, through the recall of facts. To test for comprehensiveness, one could carry out an evaluation of the broad administrative and general aspects of the education system to find out how good the education system is and how relevant the program is.

- Evaluation pertaining to course improvement is determined through assessment of instructional methods and instructional materials to establish those that are satisfactory and those which are not.

- Evaluation related to individual learners will identify their needs and help to devise a better plan for the learning process.

- Feedback to the teachers can shed light on how well they are performing.

Validity

This criterion answers the question, “Do the evaluation instruments used (e.g. examinations and tests) measure the function they are intended to measure?”

Reliability

Reliability provides a measure of consistency with respect to time (i.e. reliable instruments give the same results when administered at different times).
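One common way to put a number on this kind of consistency over time is a test-retest correlation: the same instrument is given to the same learners on two occasions, and the two sets of scores are correlated. The sketch below is only an illustration under that assumption; the function name `pearson_r` and the student scores are hypothetical and are not taken from the source text.

```python
from math import sqrt


def pearson_r(first, second):
    """Pearson correlation between two equal-length lists of scores."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two score lists of equal length (at least 2 scores)")
    n = len(first)
    mean_a = sum(first) / n
    mean_b = sum(second) / n
    # Sum of cross-products of deviations from each mean
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    var_a = sum((a - mean_a) ** 2 for a in first)
    var_b = sum((b - mean_b) ** 2 for b in second)
    if var_a == 0 or var_b == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return cov / sqrt(var_a * var_b)


# Hypothetical scores for the same five students on two administrations
# of the same test (illustrative numbers only).
first_sitting = [55, 62, 70, 81, 90]
second_sitting = [57, 60, 72, 80, 92]

test_retest_r = pearson_r(first_sitting, second_sitting)
print(round(test_retest_r, 3))
```

A coefficient close to 1 suggests that the instrument ranks learners in much the same way on both occasions; a low coefficient suggests the results depend heavily on when the instrument was administered.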

Continuity

Evaluation is a continuous process and an integral part of the curriculum development process and classroom instruction. It should therefore provide continuous feedback on weaknesses and strengths so that remedial action can be taken.

Another set of widely shared evaluation criteria that are applicable in any field are relevance, efficiency, effectiveness, impact, and sustainability.

Relevance

Relevance indicates the value of the intervention or program in relation to the needs and priorities of others: stakeholder needs, state and national priorities, and international partners’ policies, including development goals.

Efficiency

Efficiency answers the question, “Does the program use the resources in the most effective way to achieve its goals?”

Effectiveness

Effectiveness pertains to the question, “Is the activity achieving satisfactory results in relation to stated objectives?”

Impact

Impact focuses on the results of the intervention (intended and unintended; positive and negative) including social, economic, and environmental effects on individuals, institutions, and communities.

Sustainability

Education provides the way each generation passes on its culture, discoveries, successes and failures to the next generations. If there is not adequate inter-generational education, knowledge and accomplishments cannot be sustained. Education is the foundation for formulating, challenging and disseminating ideas, knowledge, skills and values within communities, nations and also globally.

5.3. Areas of Curricular Evaluation: Context, Input, Process and Product

The CIPP evaluation model emphasizes “learning-by-doing” to identify corrections for problematic project features. It is thus uniquely suited for evaluating emergent projects in a dynamic social context (Alkin, 2004). As Stufflebeam has pointed out, the most fundamental tenet of the model is “not to prove, but to improve” (Stufflebeam & Shinkfield, 2007, p. 331). The proactive application of the model can facilitate decision making and quality assurance, and its retrospective use allows the faculty member to continually reframe and “sum up the project’s merit, worth, probity, and significance”.

To service the needs of decision makers, the Stufflebeam model provides a means for generating data relating to four stages of program operation: context evaluation, which continuously assesses needs and problems in the context to help decision makers determine goals and objectives; input evaluation, which assesses alternative means for achieving those goals to help decision makers choose optimal means; process evaluation, which monitors the processes both to ensure that the means are actually being implemented and to make the necessary modifications; and product evaluation, which compares actual ends with intended ends and leads to a series of recycling decisions.

During each of these four stages, specific steps are taken:

• The kinds of decisions are identified.

• The kinds of data needed to make those decisions are identified.

• Those data are collected.

• The criteria for determining quality are established.

• The data are analyzed on the basis of those criteria.

• The needed information is provided to decision makers.

The context, input, process, product (CIPP) model, as it has come to be called, has several attractive features for those interested in curriculum evaluation. Its emphasis on decision making seems appropriate for administrators concerned with improving curricula. Its concern for the formative aspects of evaluation remedies a serious deficiency in the Tyler model. Finally, the detailed guidelines and forms created by the committee provide step-by-step guidance for users.

The CIPP model, however, has some serious drawbacks associated with it. Its main weakness seems to be its failure to recognize the complexity of the decision-making process in organizations. It assumes more rationality than exists in such situations and ignores the political factors that play a large part in these decisions. Also, as Guba and Lincoln (1981) noted, it seems difficult to implement and expensive to maintain.

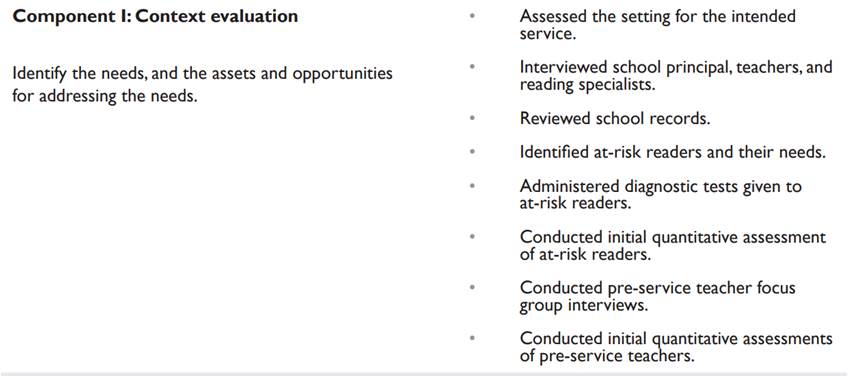

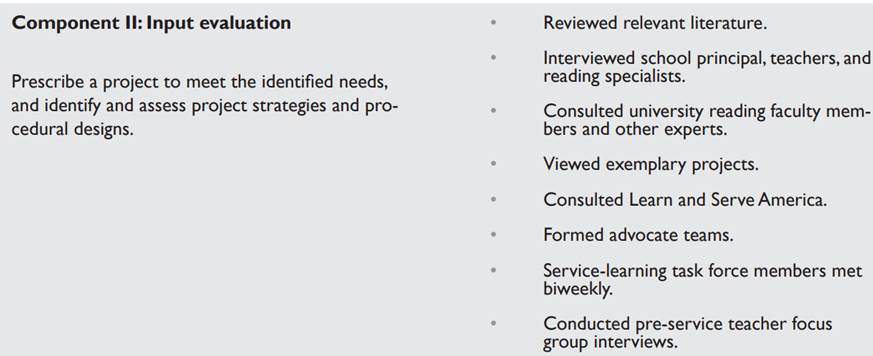

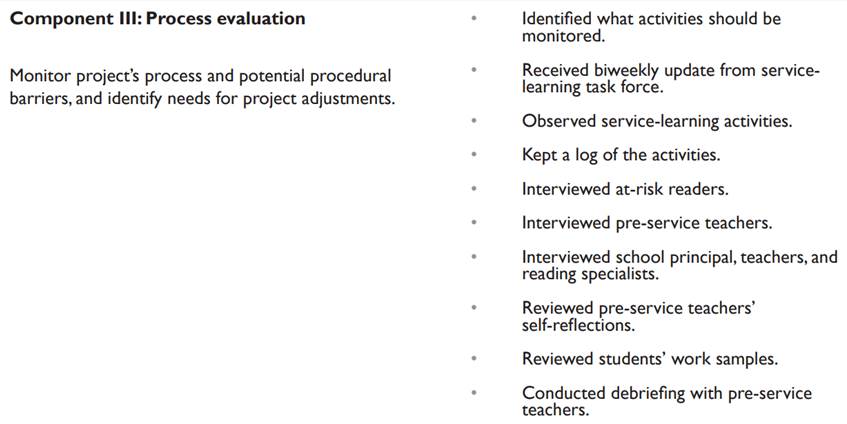

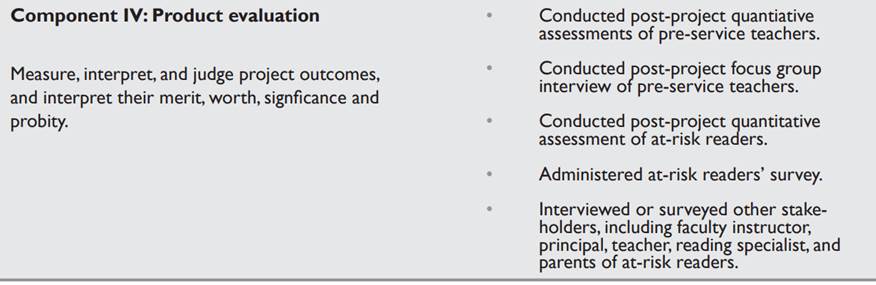

The Four Components

All four components of Stufflebeam’s CIPP evaluation model play important and necessary roles in the planning, implementation, and assessment of a project. According to Stufflebeam (2003), the objective of context evaluation is to assess the overall environmental readiness of the project, examine whether existing goals and priorities are attuned to needs, and assess whether proposed objectives are sufficiently responsive to assessed needs. The purpose of an input evaluation is to help prescribe a program by which to make needed changes. During input evaluation, experts, evaluators, and stakeholders identify or create potentially relevant approaches. Then they assess the potential approaches and help formulate a responsive plan. Process evaluation affords opportunities to assess periodically the extent to which the project is being carried out appropriately and effectively. Product evaluation identifies and assesses project outcomes, both intended and unintended.

Context evaluation is often referred to as needs assessment. It asks, “What needs to be done?” and helps assess problems, assets, and opportunities within a defined community and environmental context (Stufflebeam & Shinkfield, 2007). According to the authors, the objective of context evaluation is to define the relevant context, identify the target population and assess its needs, identify opportunities for addressing the needs, diagnose problems underlying the needs, and judge whether project goals are sufficiently responsive to the assessed needs. The methods for the context evaluation include system analyses, surveys, document reviews, secondary data analyses, hearings, interviews, diagnostic tests, and the Delphi technique.

The context evaluation component addresses the goal identification stage of a service-learning project. An effective service-learning project starts with identifying the needs of service providers (students) and the needs of the community. Many pitfalls are associated with needs assessments. Most can be attributed to the failure to identify and articulate, in advance, crucial indicators (e.g., purpose, audience, resources, and dissemination strategies). Application of the context evaluation component of the CIPP evaluation model could potentially prevent these pitfalls.

Input evaluation helps prescribe a project to address the identified needs. It asks, “How should it be done?” and identifies procedural designs and educational strategies that will most likely achieve the desired results. Consequently, its main orientation is to identify and assess current system capabilities, to search out and critically examine potentially relevant approaches, and to recommend alternative project strategies. The result of the input evaluation step is a project designed to meet the identified needs. The success of a service-learning project requires a good project plan that, if implemented correctly, will benefit both service providers (students) and service recipients (community members). Methods used to execute an input evaluation include inventorying and analyzing available human and material resources, proposed budgets and schedules, and recommended solution strategies and procedural designs. Key input evaluation criteria include a proposed plan’s relevance, feasibility, superiority to other approaches, cost, and projected cost-effectiveness. Literature searches, visits to exemplary projects, employment of advocate teams, and pilot trials are all appropriate tools to identify and assess alternative project approaches. Once a project plan is developed, it can be evaluated (using techniques such as cost analyses, logic models, Program Evaluation and Review Techniques [PERT], and various scales) according to the criteria that were identified in the input evaluation step.

Process evaluation monitors the project implementation process. It asks, “Is it being done?” and provides an ongoing check on the project’s implementation process. Important objectives of process evaluation include documenting the process and providing feedback regarding (a) the extent to which the planned activities are carried out and (b) whether adjustments or revisions of the plan are necessary. An additional purpose of process evaluation is to assess the extent to which participants accept and carry out their roles.

Process evaluation methods include monitoring the project’s procedural barriers and unanticipated defects, identifying needed in-process project adjustments, obtaining additional information for corrective programmatic changes, documenting the project implementation process, and regularly interacting with and observing the activities of project participants (Stufflebeam & Shinkfield, 2007). Process evaluation techniques include on-site observation, participant interviews, rating scales, questionnaires, records analysis, photographic records, case studies of participants, focus groups, self-reflection sessions with staff members, and tracking of expenditures.

Process evaluation can be especially valuable for service-learning projects because (a) it provides information to make on-site adjustments to the projects, and (b) it fosters the development of relationships between the evaluators (in this case, the two task force members in research and evaluation methodology) and the clients/stakeholders that are based on a growing collaborative understanding and professional skill competencies, which can promote the project’s long-term sustainability.

Product evaluation identifies and assesses project outcomes. It asks, “Did the project succeed?” and is similar to outcome evaluation. The purpose of a product evaluation is to measure, interpret, and judge a project’s outcomes by assessing their merit, worth, significance, and probity. Its main purpose is to ascertain the extent to which the needs of all the participants were met.

Stufflebeam and Shinkfield (2007) suggest that a combination of techniques should be used to assess a comprehensive set of outcomes. Doing so helps cross-check the various findings. A wide range of techniques is applicable in product evaluations, including logs and diaries of outcomes, interviews of beneficiaries and other stakeholders, case studies, hearings, focus groups, document/records retrieval and analysis, analysis of photographic records, achievement tests, rating scales, trend analysis of longitudinal data, longitudinal or cross-sectional cohort comparisons, and comparison of project costs and outcomes.

Providing feedback is of high importance during all phases of the project, including its conclusion. Stufflebeam and Shinkfield (2007) suggest the employment of stakeholder review panels and regularly structured feedback workshops. They stress that the communication component of the evaluation process is absolutely essential to assure that evaluation findings are appropriately used. Success in this part of the evaluation requires the meaningful and appropriate involvement of at least a representative sample of stakeholders throughout the entire evaluation process.

Product evaluation used in service-learning projects can serve at least three important purposes. First, it provides summative information that can be used to judge the merits and impacts of the service-learning project. Second, it provides formative information that can be used to make adjustments and improvements to the project for future implementation. Third, it offers insights on the project’s sustainability and transportability, that is, whether the project can be sustained long-term, and whether its methods can be transferred to different settings.

5.4. Methods and Tools for Curricular Evaluation

Types of Curriculum Evaluation

According to Scriven, the following are the three main types:

1. Formative Evaluation. It occurs during the course of curriculum development. Its purpose is to contribute to the improvement of the educational programme. The merits of a programme are evaluated during the process of its development. The evaluation results provide information to the programme developers and enable them to correct flaws detected in the programme.

2. Summative Evaluation. In summative evaluation, the final effects of a curriculum are evaluated on the basis of its stated objectives. It takes place after the curriculum has been fully developed and put into operations.

3. Diagnostic Evaluation. Diagnostic evaluation is directed towards two purposes: either to place students properly at the outset of an instructional level (such as secondary school), or to discover the underlying causes of deficiencies in student learning in any field of study.

Techniques of Evaluation

A variety of techniques are employed. Questionnaires, checklists, interviews, group discussions, evaluation workshops, and Delphi techniques are the major ones.

a) Observation: It is related to curriculum transaction. An observation schedule helps the evaluator focus attention on the aspects of the process that are most relevant to the investigation. This method gains credibility when it combines both subjective and objective methods. Interviews, feedback, and other documentary evidence may supplement observations.

b) Questionnaire: It is used to obtain the reactions of curriculum users, namely pupils, teachers, administrators, parents, and other educational workers, concerning various aspects of the prescribed curriculum.

c) Checklist: It can be used as part of a questionnaire or interview. It provides a number of responses, out of which the respondent checks the most appropriate ones.

d) Interview: It is a basic technique of evaluation and of gathering information. It may be formal or informal in nature. The information required should be suitably defined, and the presentation of questions should in no case betray any sort of bias on the part of the interviewer.

e) Workshops and group discussion: In this technique, experts are invited to one place to deliberate upon syllabi, materials, etc., and to arrive at a consensus regarding their quality. The materials may be evaluated against a set of criteria prepared by the evaluator.

Approaches of Curriculum Evaluation

Various models of curriculum evaluation have been developed by different experts. We shall discuss four important models of curriculum evaluation in this section.

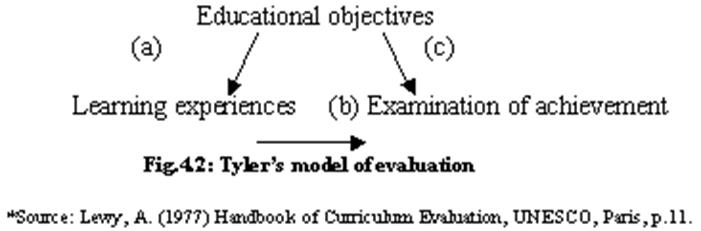

1. Tyler’s Model

Probably the best known model of curriculum evaluation is the one proposed by Tyler (1950), who described education as a process in which three different foci should be distinguished. They are educational objectives, learning experiences, and examination of achievements. Tyler’s model is shown schematically in the following figure.

In the figure, evaluation of this type is represented by the arrow marked with letter (c). This model is primarily used to evaluate the achievement level of either individual learners or a group of learners. The evaluators working with this model are interested in the extent to which learners are developed in the desired way. Both cognitive and affective domains are given importance in this model.

In Tyler’s model, the relationship between educational objectives and learner achievement constitutes only a portion of the model. The systematic study of the other relationships is also described in the model. The arrow (b) refers to the correspondence between the objectives and the learning experiences suggested in the curriculum and realized in the actual school situation. Arrow (L) refers to the examination of the relationship between the actual learning experiences and educational outcomes.

2. Stake’s Countenance Model

Stake (1969) explained curriculum evaluation in terms of ‘antecedents’, ‘transactions’ and ‘outcomes’. Let us first understand these terms. The term ‘antecedents’ refers to the conditions under which the curriculum is taught, such as the time available and the other resources provided. The term ‘transactions’ refers to what actually happens in lessons, including what is done by both the teachers and the learners. The term ‘outcomes’ connotes learners’ achievements, the effects of the curriculum on the attitudes of the students, as well as teachers’ feelings about teaching the curriculum.

This model is known as the countenance model because different people look at the curriculum and appraise it accordingly.

Stake’s evaluation model is summarized below:

| Terms | Kind of Information | Methods |
|---|---|---|
| Antecedents | Organisational background | Time table |
| Transactions (in lessons) | Teachers: | Activity records |
| Outcomes | Pupil’s achievements | Test and written work |

3. The CIPP Model

Stufflebeam (1971) proposed the CIPP model, stressing the need for attention to Context (C), Input (I), Process (P), and Product (P). The first three terms refer to formative evaluation, while the product refers to summative evaluation. Let us discuss each of the terms used by Stufflebeam below.

Context evaluation: Here the curriculum evaluator is engaged in studying the environment (context) in which the curriculum is transacted. It provides the rationale for the selection of objectives. Context evaluation is not a one-time activity. It is a continuous process for furnishing baseline information for the operations of the total system.

Input evaluation: The purpose of input evaluation is to get information on how to utilize resources optimally to meet the objectives of the curriculum. It includes evaluation of physical and non-physical inputs such as the availability of physical and human resources, time, and budget. It also includes the previous achievement, education, and aspirations of pupils.

Process evaluation: This is the most critical component of the overall model. The quality of the product largely depends on this component. It addresses the curriculum implementation decisions. Stufflebeam presents the following three strategies for process evaluation:

- To detect or predict defects in the procedural design or its implementation during the diffusion stages: In dealing with plan or curriculum defects, we should identify and continually monitor the potential sources of failure of the curriculum. The source may be logistical, financial, etc.

- To provide information for curriculum decisions: Here, we should make decisions regarding test development prior to the actual implementation of the curriculum. Some decisions may require that certain in-service activities be planned and carried out before the actual implementation of the curriculum.

- To maintain a record of procedures as they occur: It addresses the main features of the project design; for example, the particular content selected, the instructional strategies planned, or the time allotted to the planning for such activities.

4. Hilda Taba Model

Hilda Taba’s Social Studies Model emphasizes the cause-and-effect relationship in the curriculum process. The evaluation process is based on experimental control over the study materials and their effect on the achievement of the students. The researcher prepares different sets of study materials, each set having certain variations from the others. The materials are given to different groups of students. After this exposure, the curriculum is evaluated. The outcomes of curriculum evaluation determine the principles for developing new programmes.

When evaluating courses of instruction, most medical educators focus on three specific areas: Program, Process, and Participants.

Program evaluation involves a critical look at the content, goals, objectives, and evaluation methods of a course. The usual tool utilized is a questionnaire completed by the students at the end of their rotation in which different aspects of instruction and student experiences are evaluated. The results of this questionnaire are often the only evaluation utilized to make changes in curriculum content and in curriculum implementation.

Program evaluation tools:

- Review of goals and objectives for relevance

- Review of teaching quality (direct observation and questionnaire)

- Student admission data

- AAMC surveys

- Accreditation reports

- Alumni surveys

- Curriculum mapping

Process evaluation refers to the analysis of the way the program is implemented. Questions to ask here include:

1. What characteristics of the learner are stressed: knowledge, problem-solving, self-learning, cost consciousness, or cultural sensitivity?

2. Do students receive feedback on their performance, and when?

3. What is the quality of the teaching and how is it measured?

4. What is the quality of the textbook(s) used?

5. How much does the faculty become involved in decision making?

6. Is the focus of the curriculum knowledge, skills, or attitudes?

An often overlooked aspect of process evaluation is whether or not faculty teaching time is considered valuable and rewarded. Standardized questionnaires are available that will, in general, look at aspects of curriculum implementation and the learning environment [2]. Modifications of the above source with inclusion of particular details from the individual clerkship can provide similar but much less biased data than that obtained by the previous example.

Process evaluation tools:

- Questionnaires to assess attitudes

- Direct observations of the learning environment

- Interviews with faculty and students

- Debriefing sessions with students at end of course

- Clinical logs of patient encounters

- Review of test questions for validity and reliability

Participant evaluation includes an analysis of the attitudes and performance of students and faculty. Questions to ask are:

- How satisfied are participants with the curriculum?

- What is the performance (knowledge, skill acquisition and attitudes) of students who finish the course?

- What are the faculty views on teaching and how do they see themselves as teachers (facilitator, lecturer, mentor)?

- What is the amount of faculty time devoted to teaching?

Measurement of the outcomes of graduates is also a part of participant evaluation. A review of all graduates’ career choices, level of preparedness of interns in pediatrics, recent drop-out rates, certification and re-certification results, practice types and locations, and practice surveys can all be used to measure outcomes of the curricular plan.

Participant evaluation tools:

- Objective and subjective testing of students

- Grade distributions

- Feedback sessions for students and faculty

- Peer evaluation

- Career differentiation

- Outcome studies

- Attitudes toward social responsibility

Many tools or instruments are used in the evaluation process. Some of them are briefly discussed here:

Questionnaire

The questionnaire is the most commonly used method of evaluation: an individual answers a set of questions in writing. It is generally self-administered, with the respondent going through the questionnaire and responding as per the instructions. It is considered the most cost-effective evaluation tool in terms of administration. While developing a questionnaire, the teacher should ensure that it is simple, concise, and clearly stated. Evaluation done with the help of a questionnaire is largely quantitative.

Interview

The interview is the second most important technique used for evaluation; here the students participating in the evaluation are interviewed. An interview can yield both quantitative and qualitative information, and it can be conducted in a group or individually. It is a time-consuming process, so it should be arranged as per the convenience of the interviewer and the interviewee. It can also be used to evaluate a programme at the time of a student's exit, in which case it is called an exit interview. The interview should be held in a quiet room and the information obtained should be kept confidential. An interview guide can be created to serve as an objective guideline for the interviewer to follow.

Observations

Observation is the direct viewing of an activity performed by the student. It is very useful in assessing students' performance and in determining which skills they have attained. Observations need to be recorded as they are made; if recording is delayed, some important points could be missed. There is scope for subjectivity in observation, which can be overcome by developing objective criteria. Students should also be aware of the criteria, so that they can prepare themselves accordingly and keep their anxiety under control. The teacher should also prepare himself so that the assessment is fair.

Rating Scale

The rating scale is another assessment tool, in which the student's performance is measured on a continuum. A rating scale lends objectivity to the assessment. Grades can later be given to students based on their performance on the rating scale.

Checklist

A checklist is a two-dimensional tool used to assess the student's behavior for its presence or absence. The teacher can evaluate the student's performance with a detailed checklist of items and well-defined criteria. The checklist is an important tool for evaluating students' performance in the clinical area. The steps needed to complete a procedure can be listed in sequential order, which helps the teacher check whether each required action is carried out. It is an important tool in both summative and formative assessment.

Attitude Scale

An attitude scale measures the feelings of the student at the time of answering the questions. The Likert scale is the most popular. An attitude scale contains a group of statements (usually 10-15) that reflect opinions on a particular issue. The participant (student) is asked to indicate the degree to which he agrees or disagrees with each statement. Usually, a five-point Likert scale is used to assess the student's attitude. To avoid bias, an equal number of positively and negatively framed statements is included.
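As a rough illustration of how such a scale might be scored, the sketch below sums one respondent's answers on a five-point scale and reverse-codes the negatively framed statements, so that a higher total always indicates a more favourable attitude. Reverse-coding is a common scoring convention assumed here, not something stated in the text above; the function name `score_likert`, the statement positions, and the sample responses are all hypothetical.

```python
def score_likert(responses, negative_items, points=5):
    """Return the total attitude score for one respondent.

    responses: list of ints in 1..points, one per statement.
    negative_items: set of zero-based positions of negatively framed statements.
    """
    total = 0
    for index, value in enumerate(responses):
        if not 1 <= value <= points:
            raise ValueError(f"response {value} is outside 1..{points}")
        # Assumed convention: reverse-code negative statements (1 <-> 5, 2 <-> 4).
        total += (points + 1 - value) if index in negative_items else value
    return total


# Hypothetical four-statement scale; the statements at positions 1 and 3
# are negatively framed.
sample_responses = [5, 1, 4, 2]
print(score_likert(sample_responses, negative_items={1, 3}))
```

With an equal number of positive and negative statements, a respondent who simply agrees with everything ends up near the middle of the score range rather than at the top, which is one reason for balancing the statements.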

Semantic Differential

Another scale used to measure the attitude of the student is the semantic differential. This tool contains bipolar scales of adjectives such as good-bad, rich-poor, positive-negative, active-passive, etc. The number of intervals between the two adjectives is usually odd, such as five or seven, so that the middle figure represents a neutral attitude.
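The role of the odd number of intervals can be shown with a small sketch. It assumes intervals are numbered from 1 upward between the two adjectives; the function name `neutral_point` is hypothetical.

```python
def neutral_point(intervals):
    """Return the middle interval of a bipolar scale numbered 1..intervals."""
    if intervals < 1 or intervals % 2 == 0:
        # An even number of intervals has no single middle position.
        raise ValueError("a neutral midpoint needs an odd number of intervals")
    return (intervals + 1) // 2


for size in (5, 7):
    print(size, "intervals, neutral at position", neutral_point(size))
```

A rating below the returned position leans toward one adjective of the pair and a rating above it leans toward the other.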

Self-Report or Diary

A self-report or diary is a narrative record maintained by the student, which reflects his critical thoughts after careful observation. It can be a one-time or a regular assignment. A regular assignment is maintained in a spiral-bound notebook, which can be evaluated on a daily, weekly, monthly or semester basis. The self-reports submitted by students help in improving an existing programme or constructing a new one.

Anecdotal Notes

An anecdotal record is a note maintained by the teacher on the performance or behavior of a student during clinical experience. It proves to be a very valuable tool for both formative and summative evaluation of the student's performance. It is written soon after the event occurs. Because it is an assessment done on a continuous basis, it allows the student to be judged fairly. It is the duty of the teacher to give feedback to the student.

Selection of Evaluation Tool

The process of evaluation requires careful selection of the evaluation tool. The points to be observed while selecting an evaluation tool are given below:

- Appropriate: The tool should measure what it is intended to measure and should suit the domain to be measured.

- Comprehensive: The tool should be able to evaluate all the variables to be studied.

- Valid and reliable: The tool should be pretested, reliable and valid.

- Cost-effective: The tool should not be very costly to use.

- Time-saving: The tool should not be very lengthy.

5.5. Challenges in Curricular Evaluation

Lack of Time

Nursing faculty often cite lack of time as a reason for not evaluating students regularly. Lack of time may be a result of poor time-management skills, so faculty must try to overcome this barrier. If it still cannot be managed, external evaluators can be hired so that this core activity of education is not jeopardized. Faculty must consider evaluation to be as important as delivering lectures or demonstrating skills to nursing students.

Lack of Skills to Carry Out Evaluation

Some teachers may not be competent enough to plan and execute the evaluation schedule. These teachers must be identified by the principal of the college, and remedial actions, e.g. in-service education, refresher courses, etc., can be planned for those who need them.

Error in Measurement and Evaluation

There are various sources of error in measurement. One important source is the respondent, who may not be able to express his true feelings. The measurer is another: the behavior, style and looks of the person measuring the phenomenon may distort the process of measurement. Situational factors can also contribute to error. A poor-quality test or a defective measuring instrument is a further source of error in measurement.

Evaluation of the curriculum is a critical phase in the curriculum-development process. Even if all the steps in the development process are followed, it is only when the curriculum is implemented that it becomes clear whether the objectives have been met and to what degree the students have made academic progress. This is a meaningful but complicated process.