Unit 1: Concept of assessment

1.1. Definition and meaning of screening, assessment, evaluation, testing and measurement.

1.2. Assessment for diagnosis and certification – intellectual assessment, achievement, aptitude and other psychological assessments.

1.3. Developmental assessment and educational assessment – entry level, formative and summative assessments.

1.4. Formal and informal assessment – concept, meaning and role in educational settings. Standardised/Norm referenced tests (NRT) and teacher made/informal Criterion referenced testing (CRT).

1.5. Points to consider while assessing students with developmental disabilities.

1.1 Definition and meaning of screening, assessment, evaluation, testing and measurement.

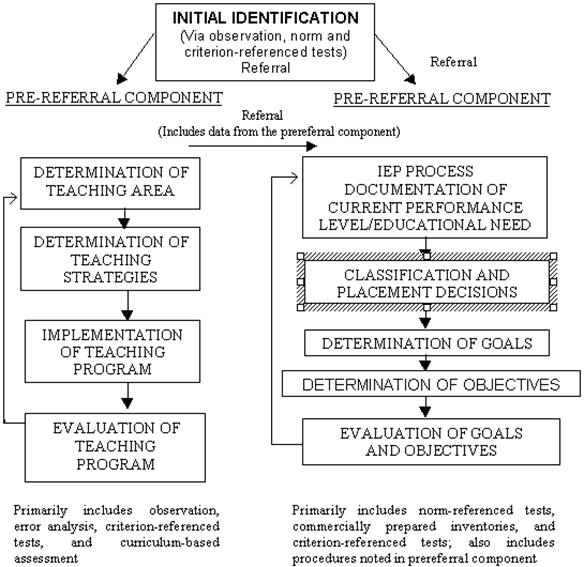

Assessment is a continuous process of understanding an individual and planning the services he or she requires. It involves collecting and organizing information to specify and verify problems and to make decisions about a student.

These decisions span a wide spectrum, ranging from screening and identification to the evaluation of a teaching plan.

The selection of assessment tools and methods varies depending on the purpose for which the assessment is carried out.

Wallace, Larsen, and Elksnin (1992): “Assessment refers to the process of gathering and analyzing information in order to make instructional, administrative, and guidance decisions for an individual.”

Why Assessment?

Taylor (1981) answers by explaining the stages of assessment:

- Stage 1 – To screen and identify those students with potential problems.

- Stage 2 – To determine and evaluate the appropriate teaching programme and strategies for a particular student.

- Stage 3 – To determine the current level of functioning and educational needs of a student.

Purpose of assessment

Anyone involved in the assessment process should know clearly the purpose for which the assessment is being conducted. This matters because the purpose decides the type of assessment tools and the means of gathering information for decision making.

For example, if the purpose is only screening and identification, we use a short screening schedule. For programme planning, we use a checklist that helps in assessing the current performance level and in selecting content for teaching.

There are many purposes of assessment. They are:

1. initial screening and identification,

2. determination and evaluation of teaching programmes and strategies (pre-referral intervention),

3. determination of current performance level and educational need,

4. decisions about classification and programme placement,

5. development of individual educational programmes (including goals, objectives and evaluation procedures),

6. evaluation of the effectiveness of the Individualized Educational Programme.

Initial screening and identification

· The students who require special attention or special educational services are initially identified through assessment procedures. The procedures involve either informal procedures such as observation or error analysis or formal procedures such as achievement or intelligence tests. In other words, assessment is used to identify the children who warrant further evaluation.

· Assessment is also used to screen children who are considered to be at “high risk” of developing various problems. These children have not yet developed deficiencies requiring special education, but they exhibit certain behaviours that suggest problems in the future. Identifying such children allows continuous monitoring of problem areas and, if required, the design of a stimulation programme to prevent the problem.

Assessment for initial identification is therefore used to identify individuals who might need further detailed assessment or who might develop problems in the future. It also identifies individuals who, with some type of immediate remedial programme, might be able to cope with the problem.

Evaluation of teaching programme and strategies (pre-referral)

One of the important roles of assessment is to determine appropriate programmes and strategies. For this purpose, information is used in four ways.

- First, before a student is referred to a special education programme, it can assist the regular teacher in determining what to teach and the best method of teaching it.

- Second, it serves as a method of evaluating the effectiveness of a particular teaching programme or strategy. A formal referral for special education can often be avoided if assessment information is used in this way. That is, assessment information can be used to develop and evaluate pre-referral intervention programming. For example, student X is getting poor marks in several subjects because he makes a lot of spelling mistakes. Before making a formal referral to special education services on the assumption that the student may have a learning disability, the regular teacher may assess and analyze the student's work product (the spelling errors) and provide a remediation programme. If the student shows progress, referral to special education services can be avoided.

- Third, in determining appropriate programmes and strategies, assessment can provide pre-referral information to document the need for a formal referral. As explained above, if pre-referral intervention fails to remediate the spelling problem, then the student needs to be referred for special education programmes.

- Fourth, the pre-referral intervention information can be incorporated into the individual education programme for students who are eligible for and who ultimately receive special education.

Determination of current performance level and educational need

The assessment of a student's current performance level in subjects or skills is essential to state the need for a special education programme. This information helps the teacher or examiner:

- to identify subject(s) or skill(s) that need special assistance.

- to identify strengths and weaknesses of students.

- to select appropriate strategies and procedures.

Decision about classification and programme placement: Assessment data are used for the classification and placement of students with special needs in appropriate special educational programmes. Theoretically, individuals are classified to indicate similarities and relationships among their educational problems and to provide nomenclature that facilitates communication within the field (Taylor, 1993). Based on assessment information, students are classified and suitable placement decisions are made. For example, a 6-year-old child who is diagnosed with mental retardation needs placement in a special education programme that provides education to children with mental retardation.

Development of the Individualized Educational Programme: The most important use of assessment information is to determine the goals, objectives and strategies for teaching children who are identified as having special educational needs. As each child's needs are different, we have to plan an educational programme that meets those needs. A systematically planned individualized educational programme is a blueprint for teachers to follow.

Evaluation of the effectiveness of the Individualized Educational Programme: Evaluation procedures are also specified in the Individualized Educational Programme (IEP), along with goals, objectives, methods and materials. Using these procedures, the teacher has to periodically monitor the progress made by the student. Monitoring the programme gives feedback (positive or negative) to both teacher and student. Based on the type of feedback, the teacher changes her plan, continues the same plan, or selects a new activity. For example, if periodic evaluation shows that the child is improving, the teacher will continue with her plan; if no improvement is shown, she may have to make changes in the IEP.

Assessment is a continuous process

Assessment

Assessment is a process by which information is obtained relative to some known objective or goal. Assessment is a broad term that includes testing. A test is a special form of assessment. Tests are assessments made under contrived circumstances especially so that they may be administered. In other words, all tests are assessments, but not all assessments are tests. We test at the end of a lesson or unit.

Assessment originated most recently of all of the terms, in 1956. It was, and is, used in education jargon to mean “determination of value.”

We assess progress at the end of a school year through testing, and we assess verbal and quantitative skills through such instruments as the SAT and GRE. Whether implicit or explicit, assessment is most usefully connected to some goal or objective for which the assessment is designed. A test or assessment yields information relative to an objective or goal. In that sense, we test or assess to determine whether or not an objective or goal has been attained. Assessment of skill attainment is rather straightforward: either the skill exists at some acceptable level or it doesn’t. Skills are readily demonstrable. Assessment of understanding is much more difficult and complex. Skills can be practiced; understandings cannot. We can assess a person’s knowledge in a variety of ways, but there is always a leap, an inference that we make about what a person does in relation to what it signifies about what he knows. In the ADPRIMA list of behavioral verbs, to assess means “to stipulate the conditions by which the behavior specified in an objective may be ascertained.” Such stipulations are usually in the form of written descriptions.

Evaluation is perhaps the most complex and least understood of the terms. Inherent in the idea of evaluation is "value." When we evaluate, what we are doing is engaging in some process that is designed to provide information that will help us make a judgment about a given situation.

Evaluation originated in 1755, meaning “action of appraising or valuing.”

It is a technique by which we come to know to what extent the objectives are being achieved. It is a decision-making process that assists in grading and ranking.

According to Barrow and McGee: It is the process of education that involves collection of data from the products which can be used for comparison with preconceived criteria to make judgment.

Nature of Evaluation

· It is a systematic process

· It is a continuous, dynamic process

· Identifies strengths and weaknesses of the program

· Involves variety of tests and techniques of measurement

· Emphasis on the major objective of an educational program

· Based upon the data obtained from the test

· It is a decision making process

Generally, any evaluation process requires information about the situation in question. A situation is an umbrella term that takes into account such ideas as objectives, goals, standards, procedures, and so on. When we evaluate, we are saying that the process will yield information regarding the worthiness, appropriateness, goodness, validity, legality, etc., of something for which a reliable measurement or assessment has been made. For example, I often tell my students that if they wanted to determine the temperature of the classroom, they would need to get a thermometer, take several readings at different spots, and perhaps average the readings. That is simple measuring. Judging whether that temperature is suitable for learning would be evaluation. Teachers, in particular, are constantly evaluating students, and such evaluations are usually done in the context of comparisons between what was intended (learning, progress, behavior) and what was obtained.
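The classroom temperature example can be sketched in a few lines of Python to show where measurement ends and evaluation begins. This is only an illustration: the function names, the sample readings and the 20 to 26 degree Celsius comfort range are assumptions made for the sketch, not values taken from the source.

```python
from statistics import mean

def measure_room_temperature(readings_c):
    """Measurement: reduce several thermometer readings to one number."""
    return mean(readings_c)

def evaluate_temperature(measured_c, low_c=20.0, high_c=26.0):
    """Evaluation: judge the measurement against a chosen criterion.

    The default comfort range is a hypothetical criterion for illustration.
    """
    if measured_c < low_c:
        return "too cold"
    if measured_c > high_c:
        return "too warm"
    return "acceptable"

# Hypothetical readings taken at four spots in the classroom.
readings = [24.0, 25.5, 23.5, 25.0]
average = measure_room_temperature(readings)
print(average, evaluate_temperature(average))
```

The first function only collects and summarizes numbers. The second adds a value judgment, and that judgment depends entirely on the criterion that someone has chosen.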

A test or an examination (or "exam") is an assessment intended to measure a test taker's knowledge, skill, aptitude, or classification in many other topics (e.g., beliefs). In practice, a test may be administered orally, on paper, on a computer, or in a confined area that requires a test taker to physically perform a set of skills. The basic component of a test is an item, which is sometimes colloquially referred to as a "question." Nevertheless, not every item is phrased as a question, given that an item may be phrased as a true/false statement or as a task that must be performed (in a performance test). In many formal standardized tests, a test item is often retrievable from an item bank.

Test originated in the 1590s, meaning “trial or examination to determine the correctness of something.”

According to Barrow and McGee: A test is a specific tool, procedure or technique used to obtain responses from students in order to gain information that provides the basis for making a judgment or evaluation regarding some characteristics such as fitness, skill, knowledge and values.

Nature of Test

· The test is reliable

· The test is valid

· It is objective

· Must be accompanied by norms

· Should not be expensive

· Less time consuming

· Must produce results and its implementation

· Its feasibility

· Must have educational values

A test may be described as a tool, a question, a set of questions, or an examination used to measure a particular characteristic of an individual or a group of individuals. It provides information about an individual’s ability, knowledge, performance and achievement.

A test may vary in rigor and requirement. For example, in a closed book test, a test taker is often required to rely upon memory to respond to specific items, whereas in an open book test, a test taker may use one or more supplementary tools such as a reference book or calculator when responding to an item. A test may be administered formally or informally. An example of an informal test would be a reading test administered by a parent to a child. An example of a formal test would be a final examination administered by a teacher in a classroom or an I.Q. test administered by a psychologist in a clinic. Formal testing often results in a grade or a test score. A test score may be interpreted with regard to a norm or criterion, or occasionally both. The norm may be established independently, or by statistical analysis of a large number of participants. A formal test that is standardized is one that is administered and scored in a consistent manner to ensure legal defensibility. A standardized test with important consequences for the individual test taker is referred to as a high-stakes test. Standardized tests are often used in education, professional certification, counseling, psychology, the military, and many other fields.

Examination

Examination originated in the 1610s, meaning “test of knowledge.”

Exams and tests are a useful way to assess what students have learned in particular subjects. Exams show which parts of a lesson each student has taken the most interest in and remembered.

Because every pupil is an individual, exams are also a useful way for teachers to find out more about the students themselves. The test environment comes with added stress, which allows teachers to see from students' work how they argue and how they think individually, something worth keeping in mind for future class activities.

Measurement

Measurement refers to the process by which the attributes or dimensions of some physical object are determined. One exception seems to be in the use of the word measure in determining the IQ of a person. The phrase, "this test measures IQ" is commonly used. Measuring such things as attitudes or preferences also applies. However, when we measure, we generally use some standard instrument to determine how big, tall, heavy, voluminous, hot, cold, fast, or straight something actually is.

It is the collection of information in numeric form. It is the record of performance or the information that is required to make a judgment.

According to R.N. Patel: Measurement is an act or process that involves the assignment of numerical values to whatever is being tested. So it involves the quantity of something.

Nature of Measurement

· It should be quantitative in nature

· It must be precise and accurate (instrument)

· It must be reliable

· It must be valid

· It must be objective in nature

Standard instruments are instruments such as rulers, scales, thermometers, pressure gauges, etc. We measure to obtain information about what is. Such information may or may not be useful, depending on the accuracy of the instruments we use and our skill at using them. There are few such instruments in the social sciences that approach the validity and reliability of, say, a 12" ruler. We measure how big a classroom is in terms of square feet, we measure the temperature of the room by using a thermometer, and we use multimeters to determine the voltage, amperage, and resistance in a circuit. In all of these examples, we are not assessing anything; we are simply collecting information relative to some established rule or standard.

Assessment is therefore quite different from measurement, and has uses that suggest very different purposes. When used in a learning objective, the definition provided on the ADPRIMA site for the behavioral verb measure is: to apply a standard scale or measuring device to an object, series of objects, events, or conditions, according to practices accepted by those who are skilled in the use of the device or scale.

To sum up, we measure distance, we assess learning, and we evaluate results in terms of some set of criteria. These three terms are certainly connected, but it is useful to think of them as separate but connected ideas and processes.

1.2 Assessment for diagnosis and certification – intellectual assessment, achievement, aptitude and other psychological assessments.

Assessment is a process of collecting data for the purpose of making decisions. Assessment provides us with baseline information for intervention, whereas evaluation is the assessment of the outcome of an intervention. In clinical practice, therefore, we need both assessment and evaluation methods. The purposes of assessment are as follows:

a. To identify the condition based on specific criteria and to establish that it is a clinical entity that requires appropriate mental health services and placement decisions

b. To identify and treat etiological factors and risk factors for ID

c. To identify the needs implicated by the condition and design a program plan to reduce the disability impact

d. To match the nature and needs of the conditions effectively with the best intervention methods available

e. To evaluate the effectiveness of intervention.

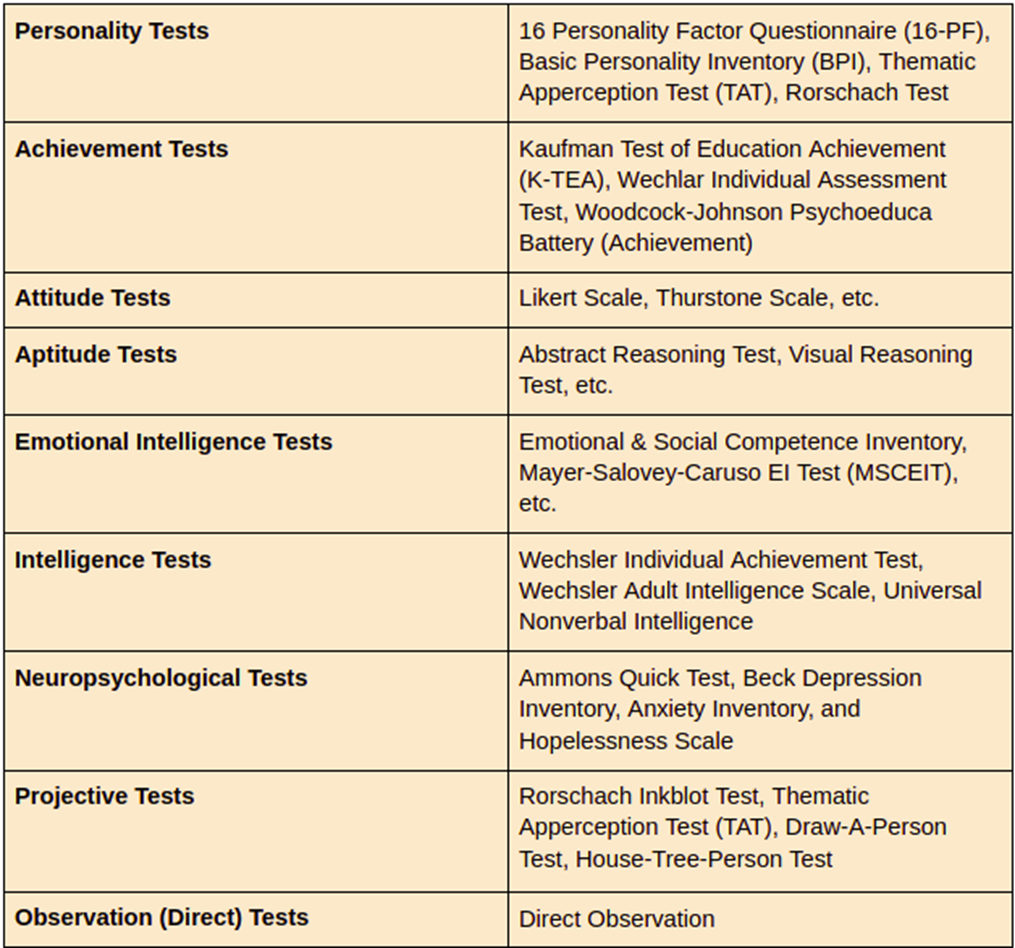

Psychological assessments, or psychological tests, are verbal or written tests designed to evaluate a person’s behaviour. Many types of psychological tests help people understand various dynamics of the human being. They help us understand why one person is good at one thing while another is good at something else. However, humans are complex beings who cannot be neatly defined and classified under fixed categories. The subjective nature of humans and individual differences have quite often drawn criticism of psychological testing.

The classification of the types of psychological tests is as follows:

- By the nature of the test: standardized and non-standardized methods of testing

- As per the functions of psychological tests such as intelligence tests, personality tests, interest inventories, aptitude tests, etc.

Intellectual assessment has changed a great deal in the twentieth century. It has moved from assessments based on language and speech patterns to sensory discrimination, with most early assessments being for the mentally deficient. Gradually, the assessment instruments developed into the precursors of the standardized instruments used in the 1990s, which measure more complex cognitive tasks for all levels of cognitive ability.

The most commonly used tests, the Wechsler scales, were not developed out of theory, but were guided rather by clinical experience. In the progression of test development, the relative alternates to the Wechsler have been more theory-based. The direction in test development in the 1990s seems to be continuing to lead to theory being at the base of intelligence tests, instead of being just purely clinically driven. Psychometric theories and neurological theories are growing as the basis for new instruments.

However, despite this proliferation of new theory-driven tests, there is a definite conservatism that holds on to the past, reluctant to let Wechsler tests be truly rivaled. Part of this hold on the past is research-based and part is clinically-based. Because the Wechsler tests have been reigning supreme for so long, there has been a mountain of research studies using the Wechsler scales. Thus, clinicians have a good empirical basis to form their understanding of what a specific Wechsler profile may be indicating. Clinically, psychologists are also quite comfortable and familiar with the Wechsler scales. A good clinician who has done many assessments may be familiar enough with every nook and cranny of the WAIS-R to barely need the manual to administer it.

Thus, the field so far has changed relatively slowly. Computer-based technology is likely going to ultimately shape the field of assessment by 2020. Computer scoring programs and computer-assisted reports are already in use, and the future is likely to include a much greater progression of technologically advanced instruments for assessing intelligence.

Here are some widely used intelligence and achievement tests:

- Wechsler Individual Achievement Test

- Woodcock-Johnson III Tests of Cognitive Abilities

- Wechsler Adult Intelligence Scale

- Stanford-Binet Intelligence Scale

- Peabody Individual Achievement Test

- Universal Nonverbal Intelligence Test

- Differential Ability Scales

Achievement tests are used to assess a test taker’s knowledge in certain academic areas. As the word achievement suggests, that is precisely what these kinds of tests measure: a student's achievement or mastery of content, skill or general academic knowledge.

An achievement test measures how much an individual has learned over time, and what the individual has learned, by analyzing present performance. It also measures how well a person understands and has mastered a particular knowledge area at the present time. With this test, you can analyze how quickly and precisely an individual performs the tasks being assessed.

An achievement test is an excellent choice to analyze and evaluate the academic performance of an individual.

For instance, every school requires its students to show their proficiency in a variety of subjects.

In most cases, the students are expected to pass to some degree to move to the next class. An achievement test will record and evaluate the performance of these students to determine how well they are performing against the standard.

The primary aim of an achievement test is to evaluate an individual. An achievement test can, however, also be the starting point for an action plan.

A high achievement score shows that the person has reached a high level of mastery and is ready for a more advanced level of instruction. On the other hand, a low achievement score might indicate that there are areas of concern the individual should improve on, or that a particular subject should be repeated.

For example, a student can decide to start a study plan because of the result of an achievement test. So it can serve as a motivation to improve or an indicator to proceed to a higher level. An achievement test is used in both the educational sector and in the professional sector.

An aptitude test is an examination that attempts to determine and measure a person’s ability to acquire, through future training, some specific set of skills (intellectual, motor, and so on). The tests assume that people differ in their special abilities and that these differences can be useful in predicting future achievements.

General, or multiple, aptitude tests are similar to intelligence tests in that they measure a broad spectrum of abilities (e.g., verbal comprehension, general reasoning, numerical operations, perceptual speed, or mechanical knowledge). The Scholastic Assessment Test (SAT) and the American College Testing Exam (ACT) are examples of group tests commonly used in the United States to gauge general academic ability; in France the baccalauréat (le bac) is taken by secondary-school students. Such tests yield a profile of scores rather than a single IQ and are widely used in educational and vocational counseling. Aptitude tests also have been developed to measure professional potential (e.g., legal or medical) and special abilities (e.g., clerical or mechanical). The Differential Aptitude Test (DAT) measures specific abilities such as clerical speed and mechanical reasoning as well as general academic ability.

People encounter a variety of aptitude tests throughout their personal and professional lives, often starting while they are children going to school.

Here are a few examples of common aptitude tests:

- A test assessing an individual's aptitude to become a fighter pilot

- A career test evaluating a person's capability to work as an air traffic controller

- An aptitude test given to high school students to determine which types of careers they might be good at

- A computer programming test to determine how a job candidate might solve different hypothetical problems

- A test designed to test a person's physical abilities needed for a particular job such as a police officer or firefighter


1.3 Developmental assessment and educational assessment – entry level, formative and summative assessments.

Entry level Assessment

Does the assessment of entry-level students' academic literacy matter? In higher education, both nationally and internationally, it is almost universally accepted that incoming students' readiness to cope with the typical reading and writing demands they will face in the language of instruction at their desired place of study needs to be assessed. This readiness to cope with reading and writing demands in a generic sense is at the heart of what is meant by academic literacy. 'Academic literacy' suggests, at least, that entry-level students possess some basic understanding of – or capacity to acquire an understanding of – what it means to read for meaning and argument; to pay attention to the structure and organization of text; to be active and critical readers; and to formulate written responses to academic tasks that are characterized by logical organization, coherence and precision of expression.

Formative Assessment

Formative assessment provides feedback and information during the instructional process, while learning is taking place. Formative assessment measures student progress, but it can also assess your own progress as an instructor. For example, when implementing a new activity in class, you can, through observation and/or surveying the students, determine whether or not the activity should be used again (or modified). A primary focus of formative assessment is to identify areas that may need improvement. These assessments typically are not graded; they act as a gauge of students’ learning progress and of teaching effectiveness (implementing appropriate methods and activities).

In another example, at the end of the third week of the semester, you can informally ask students questions which might be on a future exam to see if they truly understand the material. An exciting and efficient way to survey students’ grasp of knowledge is through the use of clickers. Clickers are interactive devices which can be used to assess students’ current knowledge on specific content. For example, after polling students you see that a large number of students did not correctly answer a question or seem confused about some particular content. At this point in the course you may need to go back and review that material or present it in such a way to make it more understandable to the students. This formative assessment has allowed you to “rethink” and then “redeliver” that material to ensure students are on track. It is good practice to incorporate this type of assessment to “test” students’ knowledge before expecting all of them to do well on an examination.

Types of Formative Assessment

· Observations during in-class activities, and of students' non-verbal feedback during lectures

· Homework exercises (as review for exams and class discussions)

· Reflection journals that are reviewed periodically during the semester

· Question and answer sessions, both formal (planned) and informal (spontaneous)

· Conferences between the instructor and student at various points in the semester

· In-class activities where students informally present their results

· Student feedback collected by periodically answering specific questions about the instruction and their self-evaluation of performance and progress

More specifically, formative assessments:

· help students identify their strengths and weaknesses and target areas that need work

· help faculty recognize where students are struggling and address problems immediately

Formative assessments are generally low stakes, which means that they have low or no point value. Examples of formative assessments include asking students to:

· draw a concept map in class to represent their understanding of a topic

· submit one or two sentences identifying the main point of a lecture

· turn in a research proposal for early feedback

Summative Assessment

Summative Assessments are given periodically to determine at a particular point in time what students know and do not know. Many associate summative assessments only with standardized tests such as state assessments, but they are also used at and are an important part of district and classroom programs. Summative assessment at the district and classroom level is an accountability measure that is generally used as part of the grading process.

Summative assessment is more product-oriented and assesses the final product, whereas formative assessment focuses on the process toward completing the product. Once the project is completed, no further revisions can be made. If, however, students are allowed to make revisions, the assessment becomes formative, where students can take advantage of the opportunity to improve.

Types of Summative Assessment

· Examinations (major, high-stakes exams)

· Final examination (a truly summative assessment)

· Term papers (drafts submitted throughout the semester would be a formative assessment)

· Projects (project phases submitted at various completion points could be formatively assessed)

· Portfolios (could also be assessed during their development as a formative assessment)

· Performances

· Student evaluation of the course (teaching effectiveness)

· Instructor self-evaluation

Summative assessments are often high stakes, which means that they have a high point value. Examples of summative assessments include:

· a midterm exam

· a final project

· a paper

· a senior recital

Difference between Formative & Summative Evaluation

| AREAS | FORMATIVE | SUMMATIVE |
| --- | --- | --- |
| DEFINITION | To monitor learning progress during instruction. | To assess learning progress at the end of teaching. |
| NATURE | Ongoing, continuous. | At the end of the instructional process. |
| PURPOSE | Feedback to the teacher and students. | Assigning grades and determining the extent to which objectives have been achieved. |
| TYPE OF TESTS | Teacher made tests and observational techniques. | Rating scales and evaluation of projects. |
| USE TO STUDENTS | a) Information for modifying instruction. b) Prescribing group or individual remedial work. | a) Certifying pupil’s mastery of the learning outcome. b) Assigning grades. |
| USE TO TEACHER | a) Information for modifying instruction. b) Prescribing group or individual remedial work. | a) Judging the appropriateness of the course objectives. b) Effectiveness of the instruction. |
| STUDENT-TEACHER RELATIONSHIP | Daily continuous interaction. | Delayed instruction. |
| FUNCTION | Guiding the development process. | Making an overall assessment. |
| EXAMPLES | Oral questions and observation. | Terminal exams. Unit tests. Project evaluation. Teacher-aid evaluation. |

1.4 Formal and informal assessment – concept, meaning and role in educational settings. Standardised/Norm referenced tests (NRT) and teacher made/informal Criterion referenced testing (CRT).

Formal assessments have data that support the conclusions drawn from the test. We usually refer to these types of tests as standardized measures. These tests have been tried out on students beforehand and have statistics that support a conclusion such as "the student is reading below average for his age". The data are mathematically computed and summarized. Scores such as percentiles, stanines, or standard scores are most commonly given from this type of assessment.
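As a rough illustration of how these score types relate to one another, the sketch below converts a raw score into a z-score, a standard score, a percentile and a stanine. It assumes the norm group's scores are approximately normally distributed and uses a standard-score scale with mean 100 and SD 15. The function name and the example numbers are hypothetical; a published test supplies its own norm tables, which should be used instead.

```python
import math
from statistics import NormalDist


def norm_referenced_scores(raw, norm_mean, norm_sd):
    """Convert a raw score to common norm-referenced scores.

    Assumption: the norm group's raw scores are roughly normal,
    so the percentile can be read from the normal curve.
    """
    z = (raw - norm_mean) / norm_sd            # distance from the norm mean in SD units
    standard_score = 100 + 15 * z              # scale with mean 100, SD 15 (assumed)
    percentile = NormalDist().cdf(z) * 100     # percent of the norm group scoring lower
    # Stanines have mean 5 and SD 2; each band is half an SD wide, limited to 1..9.
    stanine = min(9, max(1, math.floor(2 * z + 5.5)))
    return {
        "z": z,
        "standard_score": standard_score,
        "percentile": percentile,
        "stanine": stanine,
    }


# Hypothetical example: raw score 40 on a test whose norm group has mean 50, SD 10.
scores = norm_referenced_scores(raw=40, norm_mean=50, norm_sd=10)
print(scores)
```

In this made-up case the student is one standard deviation below the norm group mean, which is the kind of evidence behind a statement such as "reading below average for his age".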

Informal assessments are not data driven but rather content and performance driven. For example, running records are informal assessments because they indicate how well a student is reading a specific book. Scores such as 10 correct out of 15, percent of words read correctly, and most rubric scores are given from this type of assessment.

The assessment used needs to match the purpose of assessing. Formal or standardized measures should be used to assess overall achievement, to compare a student's performance with that of others of the same age or grade, or to identify strengths and weaknesses relative to peers. Informal assessments, sometimes referred to as criterion-referenced measures or performance-based measures, should be used to inform instruction.

The most effective teaching is based on identifying performance objectives, instructing according to these objectives, and then assessing these performance objectives. Moreover, for any objectives not attained, intervention activities to re-teach these objectives are necessary.

Norm Referenced Assessment

Norm Referenced Assessment or Norm Referenced Testing (NRT) is the more traditional approach to assessment. These tests and measurement procedures involve test materials that are standardized on a sample population and are used to identify the test taker's ability relative to others. It is also known as formal assessment.

Norm-referenced assessment is defined as a procedure for collecting data using a device that has been standardized on a large sample population for a specific purpose. Every standardized assessment instrument has certain directions that must be followed. These directions specify the procedure for administering the test and the ways to analyze, interpret and report the results. Examples of the more commonly known formal assessment devices are the Wechsler Intelligence Scale for Children – Revised (WISC-R), the Illinois Test of Psycholinguistic Abilities (ITPA), the Stanford-Binet Intelligence Test, the Peabody Picture Vocabulary Test – Revised (PPVT-R) and the Peabody Individual Achievement Test (PIAT).

Advantages of norm-referenced assessment

Norm-referenced tests are widely used in special and remedial education for many reasons.

— First, the decision of categorizing the children as exceptional or special is mainly based on the test results of NRTs.

— Second, it is easy to communicate test results to parents and others unfamiliar with tests.

— Third, norm-referenced tests have received the most attention in terms of technical data and research. They are specifically useful in problem identification and screening.

Disadvantages of norm-referenced assessment

The use of norm-referenced test data for educational programming is questioned in many instances for the following reasons.

— Information obtained from norm-referenced testing is too general to be useful in everyday classroom teaching. Many educators disregard the prognostic and interpretative data provided by standardized tests because the information is often not directly applicable to developing daily teaching activities or interventions. What does knowing a child’s WISC-R score or grade equivalent in reading specifically tell a teacher about what and how to teach? What matters, for instance, is knowing whether the child needs to learn initial consonants or is having difficulty with comprehension.

— NRTs tend to promote and reinforce the belief that the focus of the problem is within the child. It is because the primary purpose of NRTs is to compare one student with another. However, although a child may differ from the norm, the real problem may not be within the child but in the teaching, placement or curriculum. Educators must begin to assess teacher behaviours, curriculum content, sequencing and other variables not measured by norm referenced tests.

Criterion-referenced assessment (CRTs)

Criterion-referenced assessment is concerned with whether or not a child is able to perform a skill as per the criteria set. In contrast to norm-referenced assessment, which compares one person's performance to that of others, criterion-referenced assessment compares the performance of an individual to pre-established criteria. In a criterion-referenced test, the skills within a subject are hierarchically arranged so that those that must be learned first are tested first. In maths, for example, addition skills would be evaluated (and taught) before multiplication skills. These tests are usually criterion referenced because a student must achieve competence at one level before being taught at a higher level.

Advantages of criterion-referenced assessment

The criterion-referenced test results are useful:

— to identify specific skills that need intervention.

— to determine the next most logical skill to teach as the implications for teaching are more direct with criterion referenced tests.

— to conduct formative evaluation, that is, the performance of the student is recorded regularly or daily when the skills are being taught.

This makes it possible to track the student's progress and to determine whether the intervention is effective. If the skill has been achieved, the results help decide the next skill to be taught; if not, they help decide what other strategies, methods and materials should be used for teaching.

Disadvantages of criterion-referenced assessment

— Establishing the passing criteria for a specific skill is a problem in criterion-referenced testing. For example, if a test were needed to determine whether a student had mastered high school mathematics, there would be the problem of determining exactly which skills should be included in the test. Further, should a student pass the test if 90% of the questions are answered correctly, or only if 100% are correct? These decisions must be carefully considered, because setting inappropriate criteria may cause a student to struggle unnecessarily with a concept.

— It is difficult to decide exactly which skills should be included in the test.

— There is also a problem that the skills assessed may become the goals of instruction rather than selecting the skills that the child should know. Due to this, the teachers may narrow down their instruction and teach in accordance with what is measured on the test rather than what is truly required for the child to know.
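To make the passing-criterion problem concrete, the sketch below applies a 90% and a 100% criterion to the same set of results. The skill names, item counts and the function name are hypothetical and serve only to show how the chosen cut-off changes which skills count as mastered.

```python
def mastery_decisions(skill_results, criterion_pct):
    """Return {skill: True/False} for whether each skill meets the criterion.

    skill_results maps a skill name to (items correct, items attempted).
    Integer arithmetic is used so that, e.g., 9 of 10 meets a 90% criterion exactly.
    """
    return {
        skill: correct * 100 >= criterion_pct * total
        for skill, (correct, total) in skill_results.items()
    }


# Hypothetical results for one student on a hierarchically arranged maths test.
results = {
    "addition": (10, 10),
    "subtraction": (9, 10),
    "multiplication": (6, 10),
}

at_90 = mastery_decisions(results, 90)
at_100 = mastery_decisions(results, 100)
print("90% criterion: ", at_90)
print("100% criterion:", at_100)
```

With these made-up numbers, subtraction counts as mastered under the 90% criterion but not under the 100% criterion, so the student would be re-taught subtraction under one rule and moved on under the other.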

Curriculum-Based Assessment (CBA)

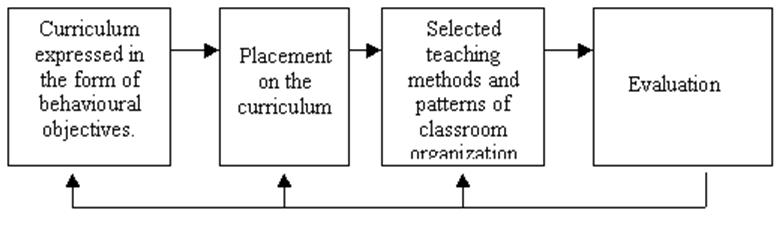

The concept of curriculum-based assessment is not new and has been employed for a number of years. CBA was developed as a means of supporting low achievers and children with special needs in regular schools. Further, it fits into the non-categorical model; that is, assessment focuses on testing curriculum-based skills and not on testing for labeling purposes.

CBA aims to identify children’s educational needs and the most appropriate forms of provision to meet those needs. Sality and Bell (1987) describe educational needs as “behaviours which a person lacks which are necessary in order to function effectively and independently both in the present and in the future”.

The starting point for conducting CBA is the child’s classroom. It is the suitability of this environment and the child’s interaction with it that is assessed and not the child.

Definition:

CBA has been defined by Blankenship and Lilly (1981) (quoted in Sality and Bell, 1987; pg.35) as the practice of obtaining direct and frequent measures of a student’s performance on a series of sequentially arranged objectives derived from the curriculum used in the classroom.

It helps in finding out the current level of a student in terms of the expected curricular outcomes of the school. In other words, the assessment instrument is based on the contents of the student's curriculum. Some types of CBA are informal, while others are more formal and standardized.

Procedure followed in developing CBA

- The first stage in the process requires that the curriculum be defined as a series of tasks which are sequenced and expressed in the form of behavioural objectives.

- Placement in the curriculum helps to identify which skills have been learned and which need to be taught in the future. It pinpoints exactly where a child is on the curriculum.

- Selection of suitable teaching methods, materials and patterns of classroom organization for teaching.

- Evaluating children’s progress – relates to the selection of teaching methods, patterns of classroom organization and choice of curriculum.

Curriculum-based assessment can therefore be seen as a procedure which sets up situations where links are established between various teaching approaches and pupil progress.
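The placement step described above can be sketched as a search through the sequenced objectives for the first one the child has not yet mastered. The objective names and the function name below are hypothetical, and the sketch assumes the curriculum has already been written as an ordered list of behavioural objectives.

```python
def place_in_curriculum(objectives, mastered):
    """Locate a child on a sequenced curriculum.

    objectives: behavioural objectives in teaching order.
    mastered:   set of objectives the child has been observed to perform.
    Returns (objectives learned so far, next objective to teach or None).
    """
    for index, objective in enumerate(objectives):
        if objective not in mastered:
            # Everything before the first unmastered objective counts as learned.
            return objectives[:index], objective
    return list(objectives), None  # the whole sequence has been mastered


# Hypothetical sequenced objectives for early number work.
sequence = [
    "counts objects to 10",
    "adds single digits",
    "adds two digits without carrying",
    "adds two digits with carrying",
]
observed = {"counts objects to 10", "adds single digits"}

learned, next_objective = place_in_curriculum(sequence, observed)
print("Learned:", learned)
print("Teach next:", next_objective)
```

Repeating the same check after a period of teaching gives the direct and frequent measure of progress that the definition of CBA calls for.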

Teacher Made Test (TMT)

A teacher-made test is an alternative to a standardized test, written by the instructor in order to measure student comprehension.

Teacher-made tests are considered most effective when they are implemented as part of the education process, rather than after the fact.

Distinctions between Criterion-referenced and Norm-referenced testing

| DIMENSION | CRITERION REFERENCED | NORM REFERENCED |
| --- | --- | --- |
| PURPOSE | To determine whether each student has achieved specific skills or concepts. To find out how much students know before instruction begins and after it has finished. | To rank each student with respect to the achievement of others. To discriminate between high and low achievers. |
| CONTENT | Measures specific skills which make up a designated curriculum. These skills are identified by teachers and curriculum experts. Each skill is expressed as an instructional objective. | Measures broad skill areas sampled from a variety of textbooks, syllabi, and the judgments of curriculum experts. |
| ITEM CHARACTERISTICS | Each skill is tested by at least four items in order to obtain an adequate sample of student performance. The items which test any given skill are parallel in difficulty. | Each skill is usually tested by fewer than four items. Items vary in difficulty. Items are selected that discriminate between high and low achievers. |
| SCORE INTERPRETATION | Each individual is compared with a preset standard for acceptable achievement. The performance of other examinees is irrelevant. A student's score is usually expressed as a percentage. Student achievement is reported for individual skills. | Each individual is compared with other examinees and assigned a score, usually expressed as a percentile or a grade equivalent. Student achievement is reported for broad skill areas, although some norm-referenced tests do report student achievement for individual skills. |
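The difference in score interpretation can be illustrated by reading one raw score in both ways. The sketch below uses a hypothetical 20-item test, a hypothetical 80% standard and a made-up comparison group; the percentile rank is computed with one common convention (scores below, plus half of the tied scores), which is an assumption rather than the method of any particular test.

```python
def criterion_referenced(correct, total, standard_pct):
    """Compare one student's percentage with a preset standard."""
    percent = 100 * correct / total
    return percent, percent >= standard_pct


def percentile_rank(score, group_scores):
    """Percent of the group scoring below the student, counting ties as half."""
    below = sum(1 for s in group_scores if s < score)
    equal = sum(1 for s in group_scores if s == score)
    return 100 * (below + 0.5 * equal) / len(group_scores)


# Hypothetical data: the student answered 15 of 20 items correctly.
group = [12, 14, 15, 15, 17, 18, 19, 20]

percent, met_standard = criterion_referenced(15, 20, standard_pct=80)
rank = percentile_rank(15, group)

print(f"Criterion referenced: {percent:.0f}% correct, standard met: {met_standard}")
print(f"Norm referenced: percentile rank {rank:.1f} in the comparison group")
```

The criterion-referenced reading ignores the other examinees entirely, while the norm-referenced reading says nothing about which skills were or were not demonstrated.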

1.5 Points to consider while assessing students with developmental disabilities.

Assessing students with learning disabilities can be challenging. Some students, such as those with ADHD and autism, struggle with testing situations and cannot remain at a task long enough to complete such assessments. But assessments are important; they provide the child with an opportunity to demonstrate knowledge, skill, and understanding. For most learners with exceptionalities, a paper-and-pencil task should be at the bottom of the list of assessment strategies. Below are some alternate suggestions that support and enhance the assessment of learning disabled students.

To obtain a comprehensive set of quantitative and qualitative data, accurate and useful information about an individual student's status and needs must be derived from a variety of assessment instruments and procedures, including RTI data if available. A comprehensive assessment and evaluation should:

1. Use a valid and the most current version of any standardized assessment.

2. Use multiple measures, including both standardized and nonstandardized assessments, and other data sources, such as

o case history and interviews with parents, educators, related professionals, and the student (if appropriate);

o evaluations and information provided by parents;

o direct observations that yield informal (e.g., anecdotal reports) or data-based information (e.g., frequency recordings) in multiple settings and on more than one occasion;

o standardized tests that are reliable and valid, as well as culturally, linguistically, developmentally, and age appropriate;

o curriculum-based assessments, task and error pattern analysis (e.g., miscue analysis), portfolios, diagnostic teaching, and other nonstandardized approaches;

o continuous progress monitoring repeated during instruction and over time.

3. Consider all components of the definition of specific learning disabilities in IDEA 2004 and/or its regulations, including

o exclusionary factors;

o inclusionary factors;

o the eight areas of specific learning disabilities (i.e., oral expression, listening comprehension, written expression, basic reading skill, reading comprehension, reading fluency, mathematics calculation, mathematics problem solving);

o the intra-individual differences in a student, as demonstrated by "a pattern of strengths and weaknesses in performance, achievement, or both relative to age, State-approved grade level standards or intellectual development" 34 CFR 300.309(a)(2)(ii).

4. Examine functioning and/or ability levels across domains of motor, sensory, cognitive, communication, and behavior, including specific areas of cognitive and integrative difficulties in perception; memory; attention; sequencing; motor planning and coordination; and thinking, reasoning, and organization.

5. Adhere to the accepted and recommended procedures for administration, scoring, and reporting of standardized measures. Express results in a form that maximizes comparability across measures (i.e., standard scores). Age or grade equivalents are not appropriate to report.

6. Provide the confidence interval and standard error of measurement, if available.

7. Integrate the standardized and informal data collected.

8. Balance and discuss the information gathered from both standardized and nonstandardized data, which describes the student's current level of academic performance and functional skills and informs decisions about identification, eligibility, services, and instructional planning.
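For point 6 above, a commonly used classical estimate of the standard error of measurement is SEM = SD × √(1 − reliability), with a confidence interval of the observed score ± z × SEM. The sketch below applies it to hypothetical values; the function name, the score, the SD and the reliability coefficient are all illustrative, and the figures published in a test's manual should be used where they exist.

```python
import math


def score_confidence_interval(observed, sd, reliability, z=1.96):
    """Return (SEM, (lower, upper)) for an observed standard score.

    Assumptions: classical test theory, a reliability coefficient taken from
    the test manual, and z = 1.96 for an approximate 95% interval.
    """
    sem = sd * math.sqrt(1 - reliability)
    margin = z * sem
    return sem, (observed - margin, observed + margin)


# Hypothetical example: standard score 85 on a scale with SD 15, reliability 0.91.
sem, (lower, upper) = score_confidence_interval(observed=85, sd=15, reliability=0.91)
print(f"SEM = {sem:.2f}; 95% interval = {lower:.1f} to {upper:.1f}")
```

Reporting the interval rather than the single score makes it visible that two scores a few points apart may not reflect a real difference.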